Yeah it sounds easy and cheap and everything was done instantly. LMAO

How much does Linius cost, and how instant and easy is Linius?

The Price

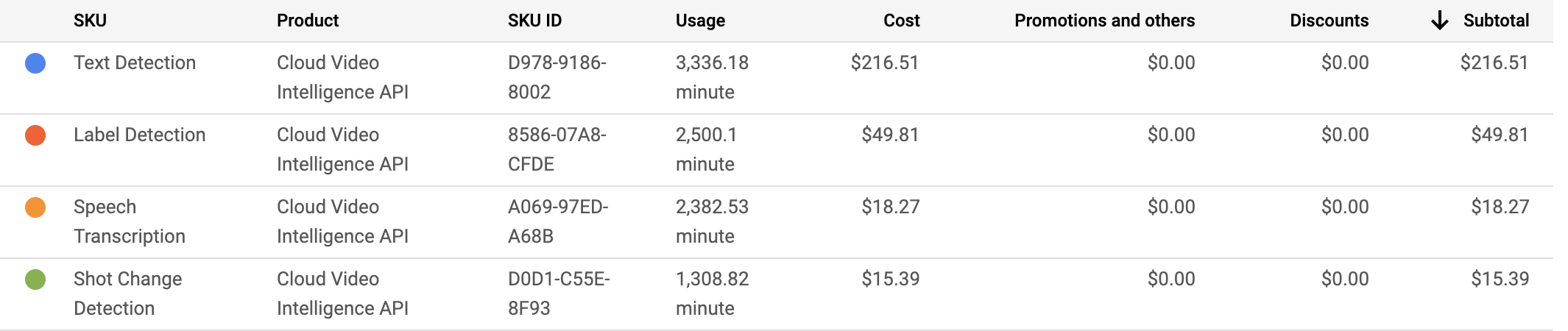

If you’re me, your first thought is, sure, but I bet it’s super expensive. I analyzed 126 GB of video, or about 36 straight hours, and my total cost using this API was $300, which was kind of pricey. Here’s the cost breakdown per type of analysis:

I was surprised to learn that the bulk of the cost came from one single type of analysis–detecting on-screen text. Everything else amounted to just ~$80, which is funny, because on-screen text was the least interesting attribute I extracted! So a word of advice: if you’re on a budget, maybe leave this feature out.

Now to clarify, I ran the Video Intelligence API once for every video in my collection. For my archive use case, it’s just an upfront cost, not a recurring one.
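To put those numbers in per-hour terms, here is a rough back-of-the-envelope sketch. It uses only the figures quoted above ($300 total, about $80 for everything except on-screen text, 36 hours, 126 GB); the `costSummary` helper is made up for illustration, and the $80 figure is approximate, so treat the results as ballpark values rather than official pricing.

```javascript
// Figures quoted in the post; the non-text cost is approximate ("~$80").
const figures = {
  totalCostUsd: 300,
  nonTextCostUsd: 80,
  hoursOfVideo: 36,
  gigabytes: 126,
};

// Hypothetical helper: derives per-hour and per-GB costs from the totals.
function costSummary({totalCostUsd, nonTextCostUsd, hoursOfVideo, gigabytes}) {
  const textCostUsd = totalCostUsd - nonTextCostUsd;
  return {
    textCostUsd,
    textShare: textCostUsd / totalCostUsd,
    perHourUsd: totalCostUsd / hoursOfVideo,
    perHourWithoutTextUsd: nonTextCostUsd / hoursOfVideo,
    perGigabyteUsd: totalCostUsd / gigabytes,
  };
}

const summary = costSummary(figures);
console.log(`On-screen text: ~$${summary.textCostUsd}`);
console.log(`Per hour, all features: ~$${summary.perHourUsd.toFixed(2)}`);
console.log(`Per hour, without text: ~$${summary.perHourWithoutTextUsd.toFixed(2)}`);
```

On these figures, on-screen text accounts for roughly $220 of the $300, so dropping it brings the cost per hour of video down from a bit over $8 to a bit over $2.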

Using the API

Using the Video Intelligence API is pretty straightforward once you’ve got your data uploaded to a Cloud Storage Bucket. (Never heard of a Storage Bucket? It’s basically just a folder stored in Google Cloud.) For this project, the code that calls the API lives in video_archive/functions/index.js and looks like this:

```javascript
const videoContext = {
  speechTranscriptionConfig: {
    languageCode: 'en-US',
    enableAutomaticPunctuation: true,
  },
};

const request = {
  inputUri: `gs://VIDEO_BUCKET/my_sick_video.mp4`,
  outputUri: `gs://JSON_BUCKET/my_sick_video.json`,
  features: [
    'LABEL_DETECTION',
    'SHOT_CHANGE_DETECTION',
    'TEXT_DETECTION',
    'SPEECH_TRANSCRIPTION',
  ],
  videoContext: videoContext,
};

const client = new video.v1.VideoIntelligenceServiceClient();

// Detects labels in a video
console.log(`Kicking off client annotation`);
const [operation] = await client.annotateVideo(request);
console.log('operation', operation);
```

- On line 1, we create a videoContext with some configuration settings for the API. Here we tell the tool that audio tracks will be in English (en-US).

- On line 8, we create a request object, passing the path to our video file as inputUri, and the location where we’d like the results to be written as outputUri. Note that the Video Intelligence API will write the data as json to whatever path you specify, as long as it’s in a Storage Bucket you have permission to write to.

- On line 12, we specify what types of analyses we’d like the API to run.

- On line 24, we kick off a video annotation request. There are two ways of doing this: run the function synchronously and wait for the results in code, or kick off a background job and write the results to a json file. The Video Intelligence API analyzes videos in approximately real time, so a 2-minute video takes about 2 minutes to analyze. Since that’s kind of a long time, I decided to use the asynchronous function call here.
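The request above hardcodes a single file name. As a minimal sketch of how the same request could be built for any uploaded video, here is a small helper. `buildAnnotateRequest` and its parameter names are hypothetical, not part of the original project or of the Video Intelligence client library; it only assembles the plain object and does not call the API.

```javascript
const path = require('path');

// Hypothetical helper (an assumption for illustration): builds the request
// object for one video, writing results to a .json file with the same
// base name in a separate bucket.
function buildAnnotateRequest(videoBucket, jsonBucket, objectName) {
  // Swap the video extension for .json, keeping any folder prefix.
  const {dir, name} = path.posix.parse(objectName);
  const jsonName = path.posix.join(dir, `${name}.json`);

  return {
    inputUri: `gs://${videoBucket}/${objectName}`,
    outputUri: `gs://${jsonBucket}/${jsonName}`,
    features: [
      'LABEL_DETECTION',
      'SHOT_CHANGE_DETECTION',
      'TEXT_DETECTION',
      'SPEECH_TRANSCRIPTION',
    ],
    videoContext: {
      speechTranscriptionConfig: {
        languageCode: 'en-US',
        enableAutomaticPunctuation: true,
      },
    },
  };
}

const exampleRequest = buildAnnotateRequest(
    'VIDEO_BUCKET', 'JSON_BUCKET', 'my_sick_video.mp4');
console.log(exampleRequest.inputUri);
console.log(exampleRequest.outputUri);
```

Given the cost breakdown earlier, removing 'TEXT_DETECTION' from the features list is the obvious knob to turn if you’re on a budget.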

If you want to play with this API quickly on your own computer, try out this sample from the official Google Cloud Node.js sample repo.

The Response

When the API finishes processing a video, it writes its results as json that looks like this:

```json
{
  "annotation_results": [
    {
      "input_uri": "/family_videos/myuserid/multi_shot_test.mp4",
      "segment": {
        "start_time_offset": {},
        "end_time_offset": {"seconds": 70, "nanos": 983000000}
      },
      "segment_label_annotations": [
        {
          "entity": {"entity_id": "/m/06npx", "description": "sea", "language_code": "en-US"},
          "segments": [
            {
              "segment": {
                "start_time_offset": {},
                "end_time_offset": {"seconds": 70, "nanos": 983000000}
              },
              "confidence": 0.34786162
            }
          ]
        },
        {
          "entity": {"entity_id": "/m/07bsy", "description": "transport", "language_code": "en-US"},
          "segments": [
            {
              "segment": {
                "start_time_offset": {},
                "end_time_offset": {"seconds": 70, "nanos": 983000000}
              },
              "confidence": 0.57152408
            }
          ]
        },
        {
          "entity": {"entity_id": "/m/06gfj", "description": "road", "language_code": "en-US"},
          "segments": [
            {
              "segment": {
                "start_time_offset": {},
                "end_time_offset": {"seconds": 70, "nanos": 983000000}
              },
              "confidence": 0.48243082
            }
          ]
        },
        {
          "entity": {"entity_id": "/m/015s2f", "description": "water resources", "language_code": "en-US"},
          "category_entities": [
            {"entity_id": "/m/0838f", "description": "water", "language_code": "en-US"}
          ],
          "segments": [
            {
              "segment": {
                "start_time_offset": {},
                "end_time_offset": {"seconds": 70, "nanos": 983000000}
              },
              "confidence": 0.34592748
            }
          ]
        }
      ]
    }
  ]
}
```

The response also contains text annotations and transcriptions, but it’s really large so I haven’t pasted it all here! To make use of this file, you’ll need some code for parsing it and probably writing the results to a database. You can borrow my code for help with this. Here’s what one of my functions for parsing the json looked like:

```javascript
/* Grab image labels (i.e. snow, baby laughing, bridal shower) from json */
function parseShotLabelAnnotations(jsonBlob) {
  return jsonBlob.annotation_results
      .filter((annotation) => {
        // Ignore annotations without shot label annotations
        return annotation.shot_label_annotations;
      })
      .flatMap((annotation) => {
        return annotation.shot_label_annotations.flatMap((annotation) => {
          return annotation.segments.flatMap((segment) => {
            return {
              text: null,
              transcript: null,
              entity: annotation.entity.description,
              confidence: segment.confidence,
              start_time: segment.segment.start_time_offset.seconds || 0,
              end_time: segment.segment.end_time_offset.seconds,
            };
          });
        });
      });
}
```

Building a Serverless Backend with Firebase

To actually make the Video Intelligence API into a useful video archive, I had to build a whole app around it. That required some sort of backend for running code, storing data, hosting a database, handling users and authentication, hosting a website–all the typical web app stuff.

For this I turned to one of my favorite developer tool suites, Firebase. Firebase is a “serverless” approach to building apps. It provides support for common app functionality–databases, file storage, performance monitoring, hosting, authentication, analytics, messaging, and more–so that you, the developer, can forgo paying for an entire server or VM.

If you want to run my project yourself, you’ll have to create your own Firebase account and project to get started (it’s free).

I used Firebase to run all my code using Cloud Functions for Firebase. You upload a single function or set of functions (in Python or Go or Node.js or Java), which run in response to events: an HTTP request, a Pub/Sub event, or (in this case) a file being uploaded to Cloud Storage.

You can take a look at my cloud functions in this file. Here’s an example of how you run a JavaScript function (in this case, analyzeVideo) every time a file is uploaded to YOUR_INPUT_VIDEO_BUCKET.

```javascript
const functions = require('firebase-functions');

exports.analyzeVideo = functions.storage
    .bucket(YOUR_INPUT_VIDEO_BUCKET)
    .object()
    .onFinalize(async (object) => {
      await analyzeVideo(object);
    });
```

Once you’ve installed the Firebase command line tool, you can deploy your functions, which should be written in a file called index.js, to the cloud from the command line by running:

firebase deploy --only functions
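One thing worth keeping in mind with a storage trigger like the one above: it fires for every file that lands in the bucket, not just videos. A minimal sketch of a guard the handler could run first, assuming the storage object passed to the handler carries a contentType field; the `isVideoObject` helper is hypothetical and not part of the original project:

```javascript
// Hypothetical guard (an assumption for illustration): returns true only
// when the uploaded object's content type marks it as a video.
function isVideoObject(object) {
  const contentType = (object && object.contentType) || '';
  return contentType.startsWith('video/');
}

// Plain objects standing in for the metadata the trigger would pass in.
console.log(isVideoObject({name: 'my_sick_video.mp4', contentType: 'video/mp4'}));
console.log(isVideoObject({name: 'notes.txt', contentType: 'text/plain'}));
```

Inside the onFinalize callback, returning early when this check fails would avoid paying to analyze files that aren’t videos.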

I also used Firebase functions to later build a Search HTTP endpoint:

```javascript
/* Does what it says--takes a userid and a query and returns
relevant video data */
exports.search = functions.https.onCall(async (data, context) => {
  if (!context.auth || !context.auth.token.email) {
    // Throwing an HttpsError so that the client gets the error details.
    throw new functions.https.HttpsError(
        'failed-precondition',
        'The function must be called while authenticated.',
    );
  }

  const hits = await utils.search(data.text, context.auth.uid);
  return {'hits': hits};
});
```

- On line 3, I use functions.https.onCall to register a new Firebase function that’s triggered when a client app calls it over HTTPS.

- On line 4, I check to see if the user that called my HTTPS endpoint is authenticated and has registered with an email address. Authentication is easy to set up with Firebase, and in my project, I’ve enabled it with Google login.

- On line 12, I call my search function, passing the userid context.auth.uid that Firebase generates when a new user registers and that’s passed when they hit my endpoint.

- On line 13, I return search results.
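The authentication check described above can be tried out without deploying anything. Here is a minimal sketch that pulls the same condition into a standalone function and feeds it hand-made context objects. The `authenticatedUid` helper and the sample contexts are hypothetical; only the shape (context.auth.uid and context.auth.token.email) is taken from the function above.

```javascript
// Hypothetical helper (an assumption for illustration): returns the caller's
// uid when they are signed in with an email address, otherwise null.
function authenticatedUid(context) {
  if (!context || !context.auth || !context.auth.token ||
      !context.auth.token.email) {
    return null;
  }
  return context.auth.uid;
}

// Hand-made stand-ins for the context Firebase passes to an onCall function.
const signedIn = {auth: {uid: 'user-123', token: {email: 'me@example.com'}}};
const anonymous = {auth: null};

console.log(authenticatedUid(signedIn));
console.log(authenticatedUid(anonymous));
```

In the deployed function, a null result corresponds to the branch that throws the HttpsError.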